Why Your LLM Needs More Tools, Not More Training

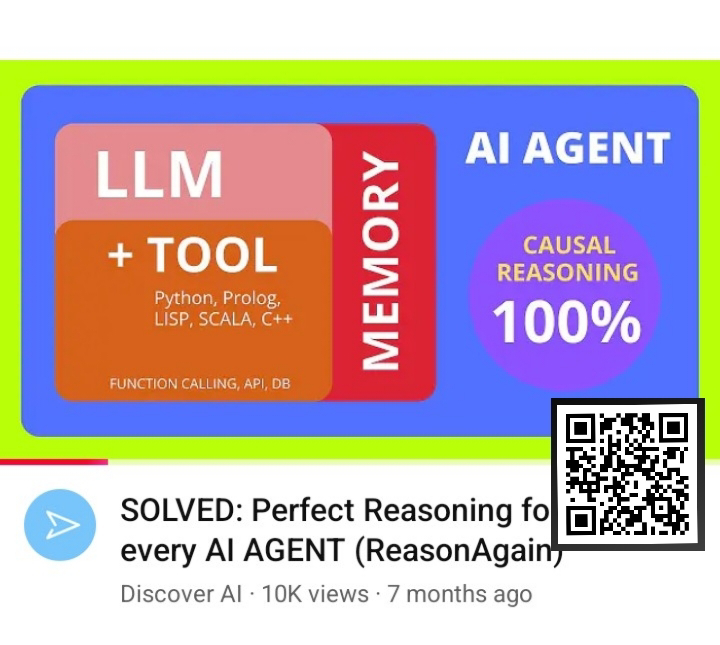

The 100% AI Reasoning Revolution: Why Your LLM Needs Tools, Not More Training

Large language models have dominated headlines with their impressive capabilities, but what if I told you that even the most powerful AI systems might be fooling us about their reasoning abilities? A groundbreaking research paper from the University of Pennsylvania, Microsoft Research, AMD, and Arizona State University reveals a startling truth: AI models may not actually understand logic the way we think they do.

The Illusion of Perfect Reasoning

Here's a simple test that exposes the problem. Ask ChatGPT: "Jane invests $1,000 at an interest rate of 5% per year. How much will her investment grow in three years?"

Most advanced LLMs answer this question correctly, achieving 100% accuracy. But here's where it gets interesting.
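The question doesn't say whether the interest compounds. If we assume annual compounding (our assumption, not something the question states), the expected answer works out as:

$$
\begin{aligned}
A &= P(1 + r)^n = 1000 \times 1.05^3 = 1157.625 \\
A - P &= 157.625 \approx \$157.63
\end{aligned}
$$

Under simple interest the growth would instead be 1,000 × 0.05 × 3 = 150 dollars. A symbolic formulation forces you to resolve exactly this kind of ambiguity.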

The Perturbation Test

The researchers introduced what they call "perturbations" – slight modifications to the same problem. Instead of asking the question once, they asked it five times with different interest rates: 60%, 40%, 55%, 45%, and 35% per year.

The results were shocking. The same AI system that achieved 100% accuracy on the original question dropped to just 20% accuracy when faced with these variations. This suggests that rather than understanding the underlying mathematical logic, the AI was likely relying on memorized patterns or superficial shortcuts.

The Symbolic Code Solution

The breakthrough comes from transforming human language problems into symbolic code – essentially Python programs. Instead of hardcoding specific values like "$1,000" and "5%," researchers created reusable functions with variables.
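To make this concrete, here is a minimal Python sketch of what such a reusable function might look like for the interest question. The name `compound_growth` is our own, and annual compounding is an assumption, since the original question doesn't specify it. Once the values are lifted out into parameters, each perturbed variant is just a different set of arguments to the same function.

```python
def compound_growth(principal: float, annual_rate: float, years: int) -> float:
    """Return the interest earned, assuming annual compounding (an assumption)."""
    final_amount = principal * (1 + annual_rate) ** years
    return final_amount - principal


# Original question: $1,000 at 5% per year for three years.
original = compound_growth(1000, 0.05, 3)
print(f"5%: ${original:,.2f}")

# Perturbed variants: identical logic, only the rate changes.
for rate in [0.60, 0.40, 0.55, 0.45, 0.35]:
    print(f"{rate:.0%}: ${compound_growth(1000, rate, 3):,.2f}")
```

Because the logic lives in the function, checking a new rate costs one call rather than a freshly written solution.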

Here's the magic: when you convert a word problem into a Python function, every quantity and operation has to be stated explicitly, which removes the ambiguity and forces the system to work with pure logic. The Python interpreter handles the computation, while the LLM only needs to translate the human language into code.
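The division of labor between the model and the interpreter can be sketched as follows. `LLM_OUTPUT` is a hard-coded, hypothetical stand-in for what a model might return when asked to translate the question into code (no model is actually called), and `run_generated_code` is likewise a hypothetical helper. Note that `exec` with a stripped-down namespace is not a security sandbox; in practice, model-written code should run in an isolated process or container.

```python
# Hypothetical text an LLM might return after translating the word problem
# into code. No model is called here; the string is hard-coded.
LLM_OUTPUT = '''
def solve(principal, annual_rate, years):
    return principal * (1 + annual_rate) ** years - principal
'''


def run_generated_code(source: str, func_name: str, **kwargs) -> float:
    """Execute model-written code, then call the named function with kwargs.

    Emptying __builtins__ limits what the code can reach, but this is NOT a
    real sandbox: untrusted code belongs in an isolated process or container.
    """
    namespace: dict = {"__builtins__": {}}
    exec(source, namespace)  # the interpreter, not the model, does the math
    return namespace[func_name](**kwargs)


answer = run_generated_code(
    LLM_OUTPUT, "solve", principal=1000, annual_rate=0.05, years=3
)
print(f"Growth after three years: ${answer:,.2f}")
```

The model's only job is producing `LLM_OUTPUT`; whether the arithmetic is right no longer depends on it.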

Why This Changes Everything

This approach reveals something profound about AI reasoning. When we give AI systems the right tools, such as access to a Python environment, even small, locally run models with just 1 billion parameters can achieve 100% accuracy on reasoning tasks of this kind, because the interpreter, not the model, carries out the arithmetic.

The key insight is this: instead of trying to cram all reasoning capabilities into the neural network itself, we should give AI systems access to the same tools humans use – calculators, programming languages, and logical frameworks.

Real-World Applications

This isn't just academic theory. The symbolic code approach works for:

- **Mathematical reasoning**: Converting word problems into executable functions

- **Logical deduction**: Transforming if-then statements into code logic

- **Financial calculations**: Automating complex investment scenarios

- **Scientific problems**: Using domain-specific computational tools
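For the logical-deduction case, one common way to turn if-then statements into code is forward chaining: keep applying rules whose premises are all known facts until nothing new can be derived. The sketch below illustrates that general technique with made-up rules; it is not taken from the paper.

```python
# A rule pairs a set of premises with the conclusion they imply.
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Apply if-then rules repeatedly until no new facts are derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire the rule only if every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# "If it rains, the ground is wet." "If the ground is wet and there are
# no covers, the game is cancelled." (Illustrative rules only.)
rules: list[Rule] = [
    (frozenset({"raining"}), "ground_wet"),
    (frozenset({"ground_wet", "no_covers"}), "game_cancelled"),
]

derived = forward_chain({"raining", "no_covers"}, rules)
print(sorted(derived))
```

Each derived fact can be traced back to the rule that produced it, which is the kind of transparency a purely neural answer lacks.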

The Tool-Augmented Future

The research points toward a future where AI agents succeed not because they have massive parameter counts, but because they have access to the right tools for each job. On tasks those tools can handle, a small, local AI model equipped with Python, database access, and internet connectivity can outperform much larger models operating in isolation.

This has profound implications for:

- **Cost efficiency**: Smaller models with tools cost less to run than massive cloud-based systems

- **Privacy**: Local models with tool access keep your data on your own hardware

- **Reliability**: Symbolic computation eliminates calculation errors in logical tasks, as long as the model translates the problem into code correctly

- **Accessibility**: Powerful reasoning becomes available to anyone with basic hardware
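The tool-augmented setup described in this section can be sketched as a small dispatcher: the model emits a structured tool call, and ordinary code runs the matching tool. Everything here is hypothetical. That includes the JSON shape `{"tool": ..., "arguments": ...}`, the tool names, and the in-memory `ACCOUNTS` table standing in for a database. Real agent frameworks and model APIs each define their own tool-calling formats.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory table standing in for a real database.
ACCOUNTS = {"jane": {"principal": 1000.0, "annual_rate": 0.05}}


def lookup_account(name: str) -> dict[str, float]:
    return ACCOUNTS[name]


def compound_growth(principal: float, annual_rate: float, years: int) -> float:
    # Annual compounding assumed.
    return principal * (1 + annual_rate) ** years - principal


# Registry mapping tool names (invented for this sketch) to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {
    "lookup_account": lookup_account,
    "compound_growth": compound_growth,
}


def dispatch(tool_call_json: str) -> Any:
    """Parse a model-emitted tool call and run the matching tool."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['tool']}")
    return tool(**call["arguments"])


# Two calls a small coordinator model might emit (hard-coded here).
account = dispatch('{"tool": "lookup_account", "arguments": {"name": "jane"}}')
growth = dispatch(json.dumps({
    "tool": "compound_growth",
    "arguments": {**account, "years": 3},
}))
print(f"${growth:,.2f}")
```

The model never touches the data or the arithmetic directly. It only decides which tool to call and with what arguments, which is why a small model can serve as the coordinator.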

The Methodology Behind the Magic

The "ReasonAgain" methodology developed by these researchers works by:

1. **Abstraction**: Converting natural language problems into symbolic representations

2. **Perturbation**: Testing the same logic with different parameters

3. **Verification**: Using code execution to ensure consistent reasoning

4. **Evaluation**: Measuring true understanding versus pattern matching
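The four steps above can be sketched as a toy evaluation loop. This is not the researchers' code. The function names are our own, annual compounding is assumed, and `memorizing_model` is a deliberately naive stand-in for an LLM that has memorized one answer. The sketch shows why perturbation exposes memorization: a model that only recalls the original answer scores perfectly on the original question and fails every variant.

```python
from typing import Callable

Inputs = dict[str, float]


def symbolic_program(principal: float, annual_rate: float, years: float) -> float:
    # 1. Abstraction: the word problem as a function (annual compounding assumed).
    return principal * (1 + annual_rate) ** years - principal


def perturb(inputs: Inputs, rates: list[float]) -> list[Inputs]:
    # 2. Perturbation: keep the logic, swap in new interest rates.
    return [{**inputs, "annual_rate": rate} for rate in rates]


def verify(program: Callable[..., float], inputs: Inputs, gold: float, tol: float = 0.01) -> bool:
    # 3. Verification: the program must reproduce the known answer before it is trusted.
    return abs(program(**inputs) - gold) <= tol


def accuracy(model: Callable[[Inputs], float], cases: list[Inputs], tol: float = 0.01) -> float:
    # 4. Evaluation: score the model against answers obtained by executing the program.
    correct = sum(abs(model(case) - symbolic_program(**case)) <= tol for case in cases)
    return correct / len(cases)


def memorizing_model(case: Inputs) -> float:
    # Toy stand-in for an LLM that recalls one memorized answer and ignores its inputs.
    return 157.63


original: Inputs = {"principal": 1000.0, "annual_rate": 0.05, "years": 3.0}
assert verify(symbolic_program, original, gold=157.625)

variants = perturb(original, [0.60, 0.40, 0.55, 0.45, 0.35])
print("original accuracy:", accuracy(memorizing_model, [original]))
print("perturbed accuracy:", accuracy(memorizing_model, variants))
```

Running it prints an accuracy of 1.0 on the original question and 0.0 on the five variants. A real model would land somewhere in between; the toy model only illustrates the mechanism.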

Limitations and Future Directions

The researchers acknowledge that this approach works best for problems that can be represented symbolically. However, the principles extend further than you might think:

- **Spatial reasoning**: Can be addressed through 3D modeling tools and computer vision

- **Causal reasoning**: Maps naturally to if-then logical structures

- **Complex scenarios**: Can be broken down into tool-addressable components

The Practical Takeaway

For developers and businesses, this research offers a clear message: stop trying to engineer perfect prompts for complex reasoning tasks. Instead:

1. Identify the core logical structure of your problems

2. Choose appropriate computational tools (Python, databases, APIs)

3. Use smaller, more efficient models as coordinators

4. Let specialized tools handle what they do best

Why This Matters Now

As AI systems become more prevalent in critical applications – finance, healthcare, engineering – we need reasoning we can trust. The symbolic code approach provides transparency and reliability that pure neural network reasoning cannot guarantee.

The future of AI isn't about building bigger models that can do everything. It's about building smarter systems that know when to reach for the right tool. As with humans, the most capable AI systems will be those that pair their language skills with the right computational tools.

Conclusion

This research fundamentally shifts how we think about AI capabilities. True artificial intelligence isn't about memorizing patterns – it's about genuine problem-solving through the intelligent use of available tools. And that future is available today, even on your local hardware.

The path to 100% reasoning accuracy isn't through bigger models or better prompts – it's through better tools and smarter system design. The AI revolution isn't just about what our models can learn; it's about what they can do when equipped with the right instruments for the task at hand.
