Understanding The Grokking Phenomenon in LLMs

From Overfitting to Generalization: Understanding the "Grokking" Phenomenon

Grokking is a fascinating neural network behavior where models suddenly achieve perfect generalization after extended training, well beyond the point of overfitting. This phenomenon, identified by researchers at OpenAI, challenges conventional wisdom about machine learning and offers new insights into how neural networks learn. Let me break down this intriguing concept.

What is Grokking?

Grokking occurs when a neural network:

1. Initially overfits training data completely (100% training accuracy)

2. Shows poor validation performance for an extended period

3. Suddenly "snaps" into perfect generalization after continued training

This behavior was observed on algorithmic tasks involving binary operations, where models were trained to learn rules like modular addition or permutation composition.

The Key Observation

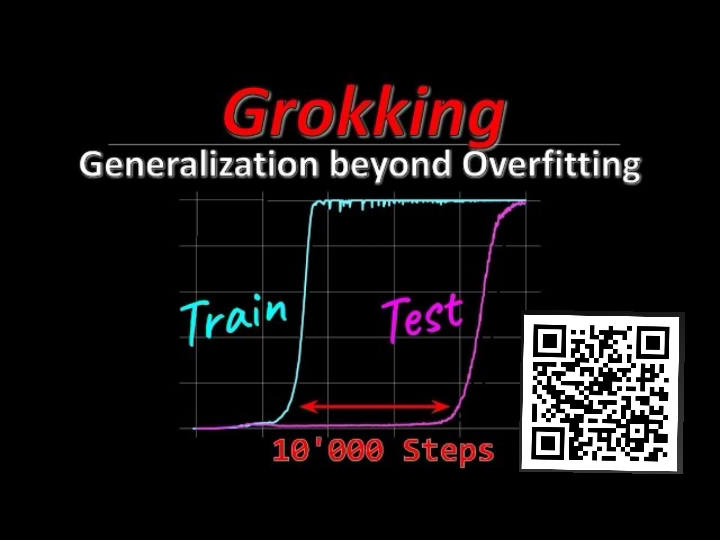

The most striking feature of grokking is visualized in the paper's signature graph:

- Training accuracy quickly reaches 100% (overfitting)

- Validation accuracy remains near zero for a long time

- After orders of magnitude more training steps, validation accuracy abruptly jumps to 100%

This challenges the standard practice of early stopping to prevent overfitting, suggesting that in some cases, continued training beyond apparent overfitting can lead to better generalization.

Relationship to Double Descent

Grokking appears related to the "double descent" phenomenon in deep learning:

- In double descent, as model complexity increases, validation performance first improves, then worsens (classic overfitting), then improves again with overparameterization

- Grokking shows a similar pattern but across training time rather than model size

Both phenomena suggest that neural networks can find generalizable solutions even in overparameterized regimes that classical learning theory would predict lead to overfitting.

Key Factors Influencing Grokking

The researchers identified several factors affecting when and how grokking occurs:

1. **Training data fraction**: More training data leads to faster grokking

2. **Weight decay**: Higher weight decay significantly accelerates grokking

3. **Operation symmetry**: Symmetric operations (like addition) grok faster than asymmetric ones

4. **Data noise**: Increasing noise makes grokking harder to achieve

Why Does Grokking Occur?

The paper doesn't definitively explain why grokking happens, but offers clues:

1. **Rule discovery vs. memorization**: As training progresses, the model transitions from memorizing data points to discovering the underlying rule

2. **Simplicity bias**: Weight decay encourages simpler solutions by penalizing large weights

3. **Structural representation**: Analysis shows that after grokking, weights organize into structures that encode the underlying operation rules

This suggests that neural networks, given enough time and the right regularization, can discover the simplest explanation for data—essentially implementing Occam's razor.

Implications and Future Directions

Grokking raises fascinating questions about neural network learning:

1. How might we identify when models are close to grokking?

2. Could we extract interpretable rules from networks that have grokked?

3. Does similar behavior occur in larger models on real-world data?

4. Can we use this knowledge to design better training procedures?

The phenomenon suggests that neural networks might be capable of more genuine "understanding" than previously thought, potentially extracting underlying rules from data without being explicitly designed to do so.

While still a work in progress when presented at ICLR 2021, this research opens exciting avenues for exploring how neural networks learn and generalize, particularly in domains where data follows clear but unspecified rules.

Comments

Post a Comment