Building an LLM-Based Operating System: A Step-by-Step Guide

Introduction to LLM-Based Operating Systems

An operating system (OS) built around a large language model (LLM) inverts the usual design: instead of a traditional kernel managing hardware resources, the LLM sits at the core and handles tasks through conversation and tool calls. This post walks you through building a basic version of such an OS using the phidata library and GPT-4.

Setting Up Your Environment

1. Install Docker

Docker is essential for running PostgreSQL as a vector database. Install Docker on your system:

- **Mac/Linux**: Use your package manager or download from [Docker's official site](https://www.docker.com/).

- **Windows**: Install Docker Desktop from the same site.

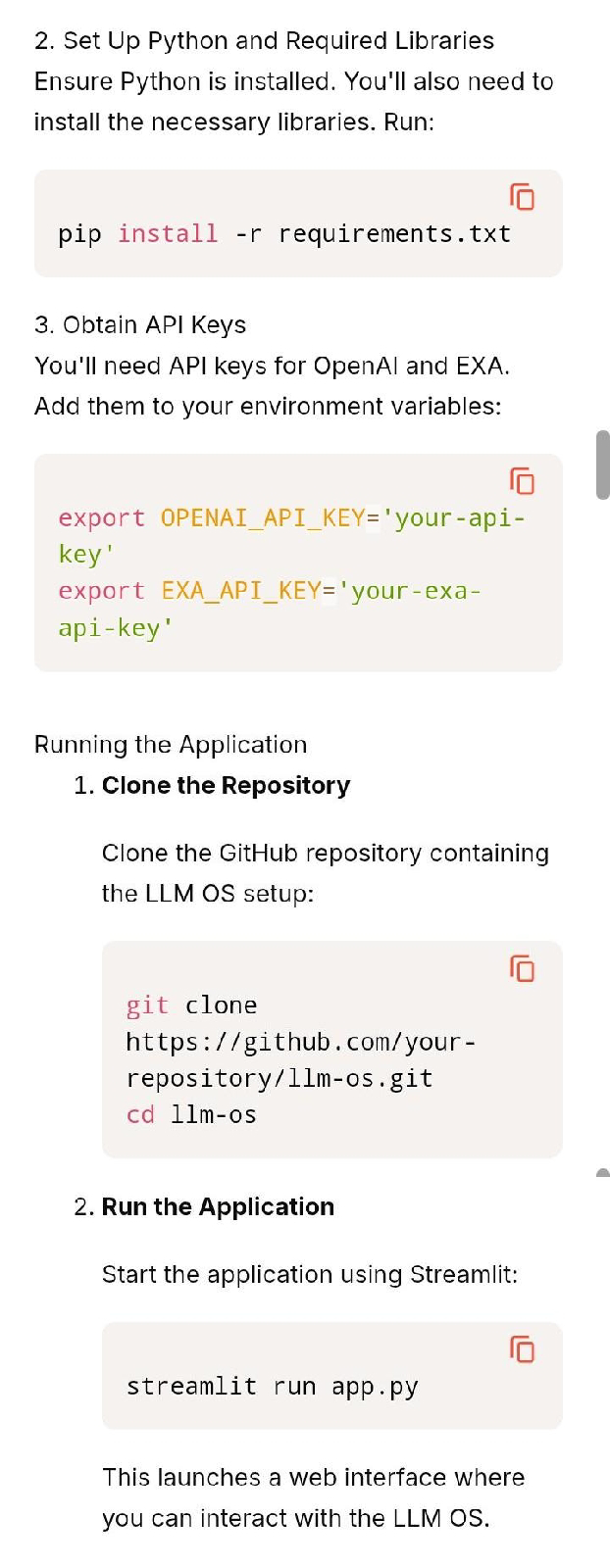

2. Set Up Python and Required Libraries

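Ensure Python is installed, then install the required libraries. The dependencies are listed in the project's requirements.txt, so run this from the project directory once you have cloned the repository (see Running the Application):

```bash
pip install -r requirements.txt
```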

3. Obtain API Keys

You'll need API keys for OpenAI and Exa. Add them to your environment variables:

```bash
export OPENAI_API_KEY='your-api-key'
export EXA_API_KEY='your-exa-api-key'
```
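Before starting the app, it can help to confirm that both variables are visible to Python. The following is a minimal sketch rather than part of the project: the helper name `missing_keys` is hypothetical, and only the two variable names are taken from the commands above.

```python
import os

# The two variable names come from the export commands above.
REQUIRED_KEYS = ("OPENAI_API_KEY", "EXA_API_KEY")


def missing_keys(env=None, required=REQUIRED_KEYS):
    """Return the names of required variables that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All API keys are set.")
```

If a key is reported missing, re-run the matching `export` command in the same shell session you will use to start the app.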

Running the Application

1. Clone the Repository

Clone the GitHub repository containing the LLM OS setup, replacing the placeholder URL below with the actual repository address:

```bash
git clone https://github.com/your-repository/llm-os.git
cd llm-os
```

2. Run the Application

Start the application using Streamlit:

```bash
streamlit run app.py
```

This launches a web interface where you can interact with the LLM OS.

Exploring the Tools and Features

The LLM OS comes equipped with several tools:

- **Calculator**: Perform basic arithmetic operations.

- **File Tools**: Manage files and documents.

- **Web Search**: Use DuckDuckGo for internet searches.

- **Shell Commands**: Execute system commands.

- **Stock Analysis**: Generate investment reports using Yahoo Finance.
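To make the idea of a tool concrete, here is a minimal sketch of how a calculator tool and a name-based dispatcher could look. It is an illustration under assumptions, not the project's actual implementation: `calculator`, `TOOLS`, and `run_tool` are hypothetical names, and the real tools are provided by the library the app is built on.

```python
import ast
import operator
from typing import Callable, Dict

# Arithmetic operators the calculator accepts; anything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _is_number(value: object) -> bool:
    """True for ints and floats, but not for booleans."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def calculator(expression: str) -> str:
    """Evaluate basic arithmetic by walking the syntax tree, without eval()."""

    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and _is_number(node.value):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            left, right = evaluate(node.left), evaluate(node.right)
            return _BINARY_OPS[type(node.op)](left, right)
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -evaluate(node.operand)
        raise ValueError("unsupported expression")

    return str(evaluate(ast.parse(expression, mode="eval").body))


# Hypothetical registry: tool name -> function taking and returning text.
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def run_tool(name: str, argument: str) -> str:
    """Dispatch a tool call that the model has requested by name."""
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name](argument)
```

In this sketch the model would answer with a tool name and an argument, and the surrounding application would call `run_tool` and feed the result back into the conversation. Other tools would be registered in `TOOLS` the same way.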

Adding Knowledge to the System

You can extend the OS with external knowledge, for example the contents of a web page:

1. Add a URL

Navigate to the knowledge section of the web interface and enter a URL. The system processes the page and stores its content in the knowledge base.

2. Query the Knowledge Base

Once the page is added, you can ask questions about it. Asking about a specific topic retrieves the relevant passages from the stored content.
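The add-then-query flow can be sketched in a few lines. This is a simplified stand-in, not the project's code: it keeps chunks in memory and ranks them by word-count cosine similarity, whereas the real system stores content in the PostgreSQL vector database. The `KnowledgeBase` class and its methods are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class KnowledgeBase:
    """In-memory store of text chunks, searched by similarity to a query."""

    def __init__(self) -> None:
        self._chunks: List[Tuple[str, Counter]] = []

    def add_text(self, text: str, chunk_size: int = 40) -> None:
        """Split text into chunks of chunk_size words and store each one."""
        words = text.split()
        for start in range(0, len(words), chunk_size):
            chunk = " ".join(words[start:start + chunk_size])
            self._chunks.append((chunk, tokenize(chunk)))

    def search(self, query: str, limit: int = 1) -> List[str]:
        """Return the stored chunks most similar to the query."""
        query_vector = tokenize(query)
        ranked = sorted(
            self._chunks,
            key=lambda item: cosine(query_vector, item[1]),
            reverse=True,
        )
        return [chunk for chunk, _ in ranked[:limit]]
```

A short usage example, with the page text passed in directly instead of being fetched from a URL:

```python
kb = KnowledgeBase()
kb.add_text("Docker runs PostgreSQL as a vector database.")
kb.add_text("Streamlit launches the web interface.")
print(kb.search("vector database"))
```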

The Role of Prompt Engineering

Effective interaction with the LLM OS relies on well-crafted prompts. For example, to generate an investment report:

```plaintext
Can you create an investment report on Tesla?
```

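From this single request, the system gathers data with its tools (here, the Yahoo Finance stock analysis), processes it, and generates a structured report. The wording of the request, together with the instructions given to the model, determines which tools run and how the output is organized.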
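One way to picture this is as a pair of messages: fixed system instructions plus the user's request. The sketch below assumes the common chat format of `role` and `content` dictionaries. The instruction text, the report sections, and the `build_messages` helper are hypothetical, and the project's real system prompt may differ.

```python
from typing import Dict, List

# Hypothetical instructions; the project's real system prompt may differ.
SYSTEM_TEMPLATE = (
    "You are the core of an LLM OS. Available tools: {tools}. "
    "When asked for an investment report, gather stock data first, then "
    "answer with the sections: Overview, Financials, Risks, Recommendation."
)


def build_messages(user_request: str, tools: List[str]) -> List[Dict[str, str]]:
    """Pair the system instructions with the user's request in chat format."""
    system = SYSTEM_TEMPLATE.format(tools=", ".join(tools))
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_request.strip()},
    ]
```

Changing the section list in the template changes the structure of every report, without touching the user's question.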

Conclusion and Future Thoughts

Building an LLM OS is a glimpse into the future of computing, where LLMs could replace traditional kernels. This project showcases the potential of integrating AI into OS design. While it's still in its early stages, the possibilities are vast.

Would you like to see more projects like this? Let me know your thoughts in the comments!

---

This guide provides a foundational understanding of creating an LLM OS. With further development, such systems could become mainstream, redefining how we interact with computers.
